Project Aria is a new research initiative from Facebook and Ray-Ban to build smart glasses that incorporate augmented reality and artificial intelligence. In this guide, we go through everything we know about Aria, including:

- What does the design look like?

- What smart features do the glasses have?

- What are some possible use cases?

- When will Facebook release Project Aria or other AR glasses to consumers?

If you want to know anything else, make sure to leave a comment at the bottom of this guide!

Latest Update

Facebook and Ray-Ban have teased a special announcement about their smart glasses for September 9th. The news comes right after Facebook VP Andrew Bosworth previewed a few videos that were supposedly taken using the smart glasses. You can view those below.

Project Aria Overview

Project Aria is a pair of smart glasses being used as a research device by Facebook Reality Labs. Its goal is to determine what consumer augmented reality smart glasses actually need. Facebook has entered a multi-year partnership with Ray-Ban to place technology inside its glasses.

Beginning in September of 2020, a few hundred Facebook employees and contractors will be wearing the Aria smart glasses on campus and in public spaces around San Francisco. After analyzing the research, Facebook has committed to announcing its first consumer smart glasses in fall of 2021.

Below, we break down the Project Aria smart glasses in more detail!

Sensors

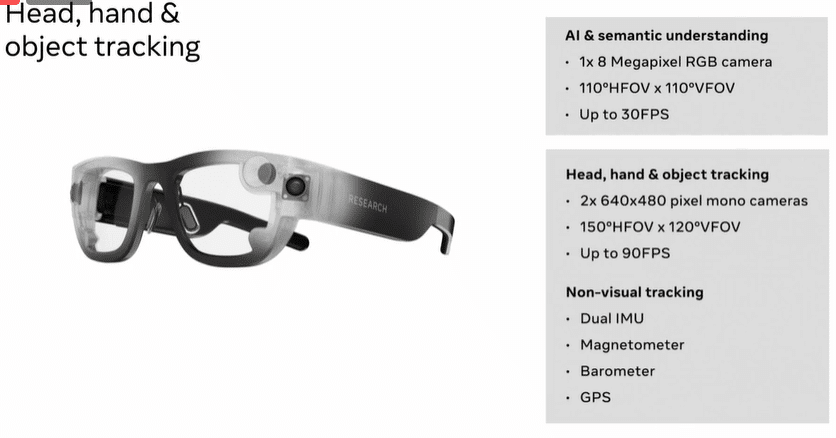

The Facebook Aria glasses are packed full of sensors and technology designed to capture data about each wearer’s usage. An 8-megapixel camera with a 110-degree field of view and 30 frames-per-second video capture is included to understand how the glasses are being used.

Two lower-quality cameras are used for near-sight video. They have a 150-degree field of view and a 90 frames-per-second capture rate. These cameras track hand movements and nearby objects.

Project Aria’s other sensors include a magnetometer, a barometer, a GPS chip, and two inertial measurement units (IMUs) to keep track of position and motion.
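To put the camera numbers in perspective, here is a small Python sketch that records the specs quoted above and works out how many raw megapixels per second the main camera produces. The `Camera` class and its field names are our own invention for illustration, not anything from Facebook, and the near-sight cameras are left without a resolution because no figure is given for them.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Camera:
    """Hypothetical record of the camera specs quoted in this guide."""
    name: str
    fov_degrees: int
    frames_per_second: int
    megapixels: Optional[float] = None  # None when no resolution is quoted

    def raw_megapixels_per_second(self) -> Optional[float]:
        """Resolution times frame rate, ignoring any compression."""
        if self.megapixels is None:
            return None
        return self.megapixels * self.frames_per_second


# Figures taken from the Sensors section above.
main_camera = Camera("main", fov_degrees=110, frames_per_second=30, megapixels=8)
tracking_camera = Camera("near-sight tracking", fov_degrees=150, frames_per_second=90)

print(main_camera.raw_megapixels_per_second())      # 8 MP x 30 fps
print(tracking_camera.raw_megapixels_per_second())  # unknown resolution
```

The main camera works out to 240 megapixels of raw image data every second before any compression, which gives a rough sense of why battery life and processing are such big questions for smart glasses.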

What is Project Aria’s Release Date?

The Aria smart glasses do not have a release date and are not meant for consumer purchase. Unlike the new Oculus Quest 2, they are intended only for research use by Facebook. The first consumer device is expected by late 2021 at the latest. We expect an update around the time of Facebook Connect 8 in September of 2021.

Purpose of the Project

The company is using data from the glasses to determine exactly how augmented reality glasses would be worn and used by the general public.

What if you didn’t need to look down at your phone to get real-time data and information? Imagine smart glasses that could give you information in your line of sight. This simple possibility is the purpose of the Aria project.

The Facebook Reality Labs team will gather information on how people use and wear the glasses, which components are important, how to create something weatherproof and durable, and more. Project Aria is about figuring out the privacy, safety, and policy model before real AR glasses are brought to the world.

If you have some privacy concerns about the entire project, Facebook has addressed those! See what they said in the next section.

Principles of the Project

Project Aria has a few basic principles that Facebook is focusing on:

- Never surprise people

- Controls that matter

- Consider everyone

- Put people first

Per Facebook, “trust is earned and not given,” and Aria is a chance for the company to redeem itself and live up to that philosophy.

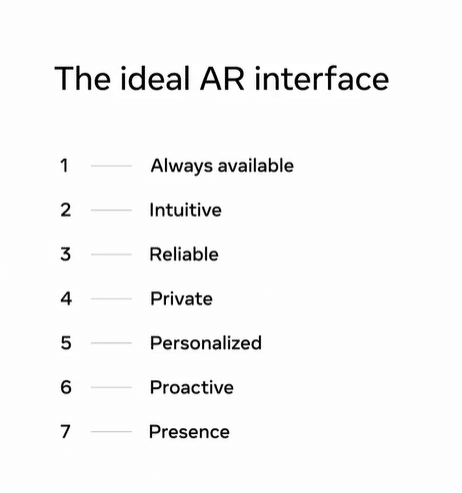

The Ideal AR Glasses Interface

In advance of the research project, Facebook has already outlined what it believes to be the ideal interface for augmented reality smart glasses. It should be always available, intuitive, reliable, private, personalized, proactive, and aware of your presence.

What do these factors mean? Are they features you want in a pair of smart glasses?

Always Available

Because AR glasses sit on your face, they need to always provide you with relevant, contextual information. Sometimes you will want to disable augmented reality and smart features, such as when you are in business meetings. However, when you need something, you need an easy way to bring your glasses back into focus.

Intuitive

Just like a keyboard or a phone interface, using smart glasses needs to be second nature to you. Without a controller or interface device, launching and using apps and games needs to be easy.

Reliable

If you have the ability to see the world through smart lenses, then your AR glasses need to be reliable. This means that your glasses need access to tons of information from the cloud in real time. And all of this information needs to be ready when you need it, while still keeping battery life acceptable.

Private

Smart glasses need to be private, so that what you see isn’t transmitted anywhere. They show a view that is more sensitive than what is on your personal phone, so privacy settings should be adjusted to reflect that.

Personalized

With object recognition and an idea about your personal interests, smart glasses should provide you with a personal experience. Facebook showcased some app concepts that lead to a more personalized product:

- View how many calories are in the food you are eating in real-time

- See information about plants and trees along the street

- Get real-time score updates for your favorite teams

Proactive

Unlike your phone, which only provides information when you prompt it, smart glasses can be proactive. Data and information can be pushed to the display in your line of sight whenever the glasses know it is relevant.

Presence

Smart glasses should understand the context of where you are in the real world. This will allow them to be present when you need them, but hidden during sensitive or unimportant moments.

Do you agree with these interface guidelines for a pair of smart glasses? What other ideas or features would contribute to them?

Areas of Focus

In addition to all of the theory above, Facebook also showcased some areas of focus for the Project Aria research team, with pilot demos and real-world examples for each:

- Input & Output

- Machine Perception

- Interaction

Input and Output

How you give input to smart glasses and get results back is really important. At Facebook Connect, the company showcased an electromyography (EMG) wristband, which records electrical activity in muscle tissue.

EMG can track hand movement with accuracy down to 1 millimeter. The concept is like hand tracking on steroids! In another demo, Facebook showed how EMG can be used to add a virtual sixth finger in augmented reality and control it through muscle memory.

With this accuracy, what features could smart glasses add?

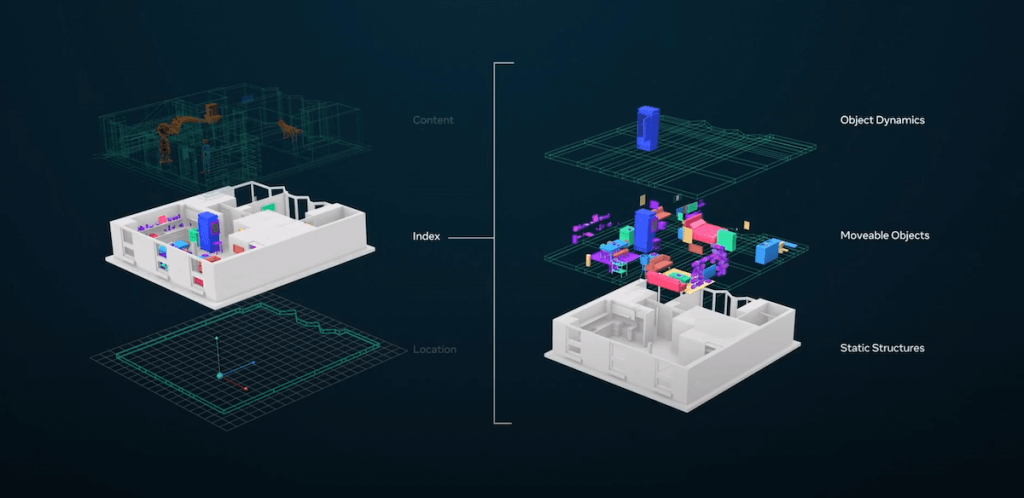

Machine Perception

Machine Perception means mapping out the real world accurately no matter where you are. The world is locked in place with persistent objects. A common coordinate system shows exactly where you are in relation to these objects.
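To make the idea of a common coordinate system a little more concrete, here is a minimal Python sketch. It assumes a flat 2D map and a made-up function name, `world_to_wearer`, and it is only our illustration of the general concept, not how Facebook actually does it. Given where a persistent object sits on the shared map and where the wearer is standing and facing, it works out where that object is relative to the wearer.

```python
import math


def world_to_wearer(obj_xy, wearer_xy, wearer_heading_rad):
    """Convert a point on the shared map into the wearer's point of view.

    Hypothetical helper: returns (forward, left) distances in the same
    units as the map. A heading of 0 means facing along the map's +x axis,
    and headings increase counterclockwise.
    """
    # Offset of the object from the wearer, still in map coordinates.
    dx = obj_xy[0] - wearer_xy[0]
    dy = obj_xy[1] - wearer_xy[1]

    # Rotate the offset by the negative of the heading so that
    # "forward" lines up with the direction the wearer is facing.
    cos_h = math.cos(wearer_heading_rad)
    sin_h = math.sin(wearer_heading_rad)
    forward = cos_h * dx + sin_h * dy
    left = -sin_h * dx + cos_h * dy
    return forward, left


# A bench fixed at (1, 3) on the map; the wearer stands at (1, 1) facing +y.
forward, left = world_to_wearer((1.0, 3.0), (1.0, 1.0), math.pi / 2)
print(f"{forward:.1f} ahead, {left:.1f} to the left")
```

Because the bench's map position never changes, every pair of glasses that knows its own position and heading can place the bench correctly from its own viewpoint, which is what keeps virtual content locked in place.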

Livemaps is a platform that contains location information and index information for your current personal space. It also contains content information, which is the location of virtual objects in the real world.

The content information is very important: it covers the details about your life, such as data from your phone and calendar. This contextual information is important only to you.

If smart glasses can perceive where you are and overlay the content information about your life, they can provide a deeply personal experience.

Interaction

Interaction with other people is key to our lives, and smart glasses have the potential to improve this experience. Facebook showed off a demo in which smart glasses filter out noise in a loud environment in real time. This would allow you to talk to a friend at a normal volume while wearing smart glasses.

Smart glasses involve interaction more than smartphones and computers do. AR glasses need to get this part of life right.

What do you think about the Project Aria smart glasses? What features would you like to see in a pair of AR glasses? Comment below and let us know!